The videos and audio clips you encounter online may not always be genuine. That familiar voice on a video call or viral clip of a public figure might be entirely fabricated. These are deepfakes: synthetic media created by artificial intelligence to mimic real people with startling accuracy.

Deepfake videos and audio content are becoming more advanced, more realistic, and harder to spot and stop. To make matters worse, they can be easily created in hours using widely available tools, and are already being used to impersonate executives, spread fake news, and scam customers.

As AI-generated synthetic media reaches near-perfect realism, understanding how to spot deepfakes has become vital.

StayModern explores how deepfakes are being used and how you can identify them to protect yourself, your organization, and your community from synthetic deception before it causes lasting damage.

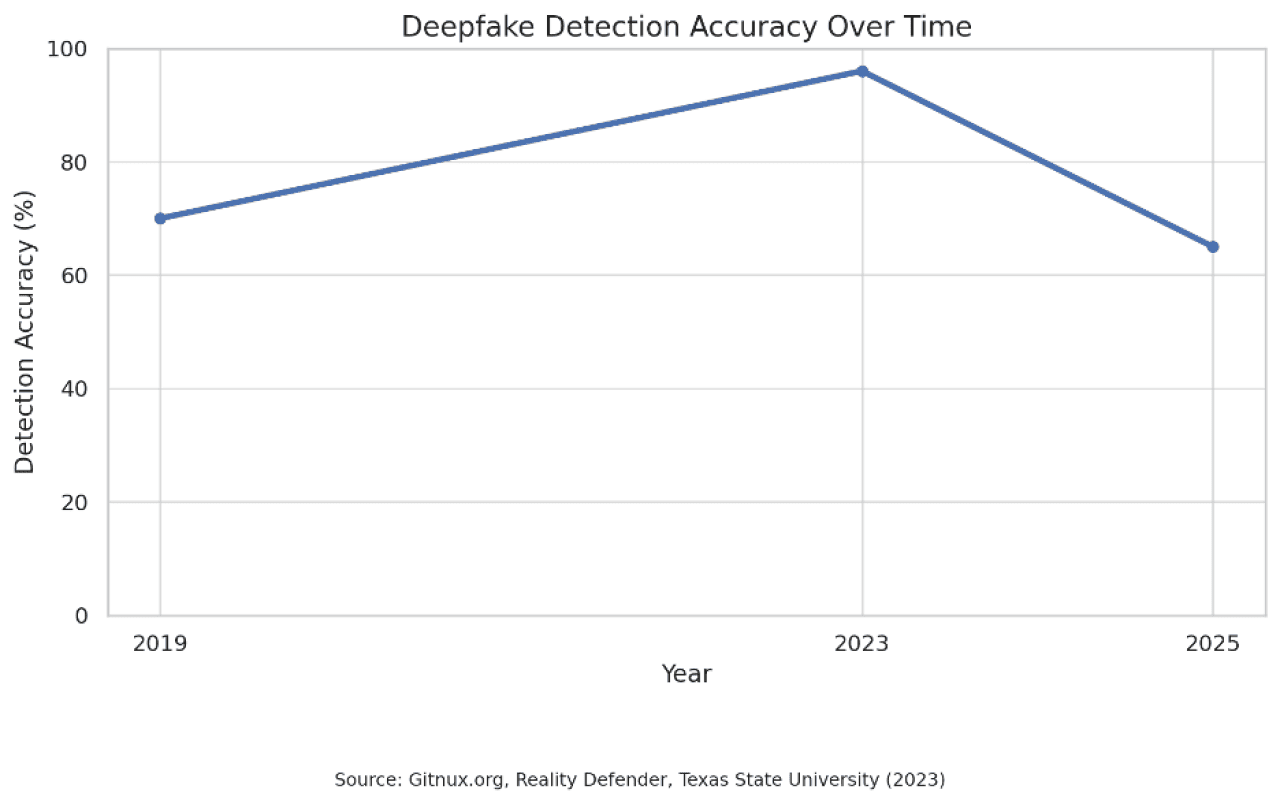

Deepfake detection technology has made impressive progress in recent years, but it's falling behind as AI development accelerates.

In 2023, leading detection systems could identify deepfakes with up to 98% accuracy. By 2025, that number has dropped to 65% as creators use adversarial methods to bypass detection. Deepfake generation tools are learning to mimic human behavior more convincingly, making it harder for even advanced algorithms to flag fake content.

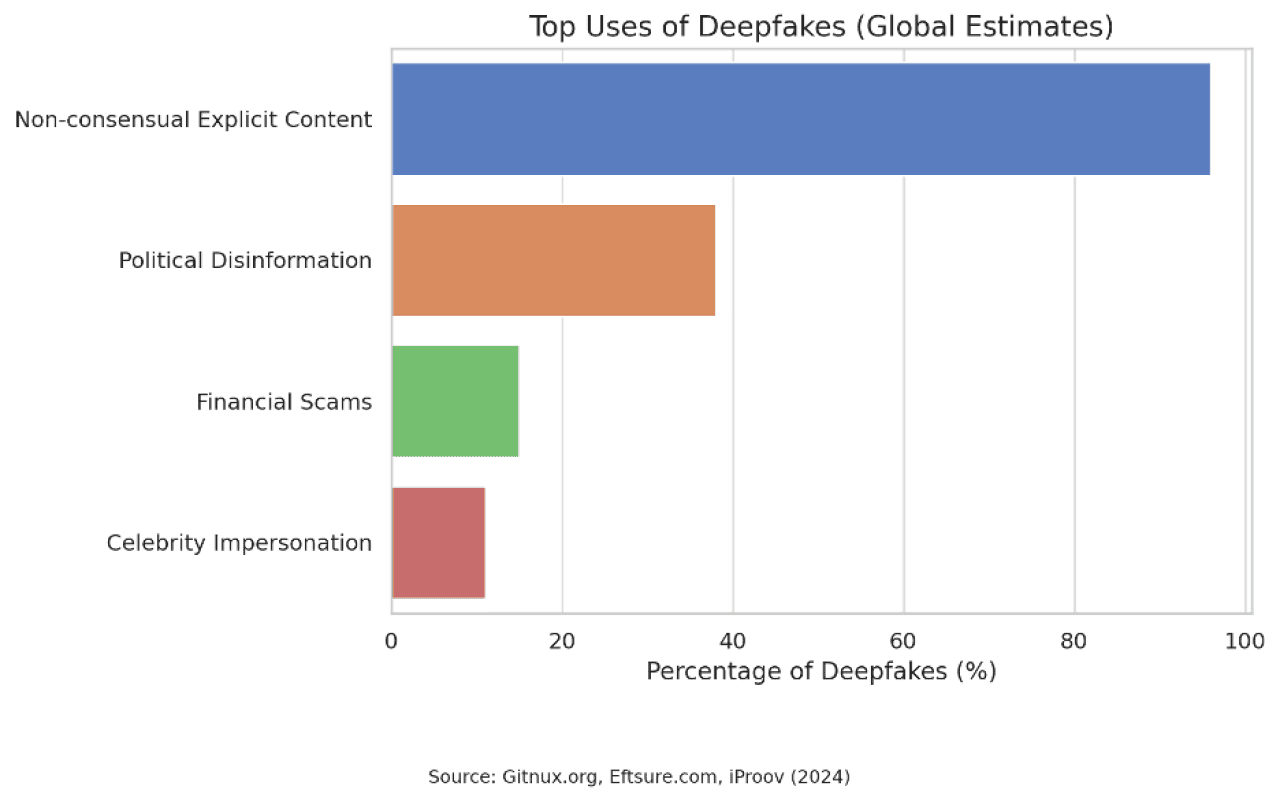

Deepfake technology has spread across industries and platforms at an alarming rate. While some creators use it for legitimate entertainment or artistic purposes, most use it to target victims.

The following stats show how video and audio deepfakes are being used today:

These numbers clearly show that the main purposes of deepfakes are to deceive, manipulate, and cause harm.

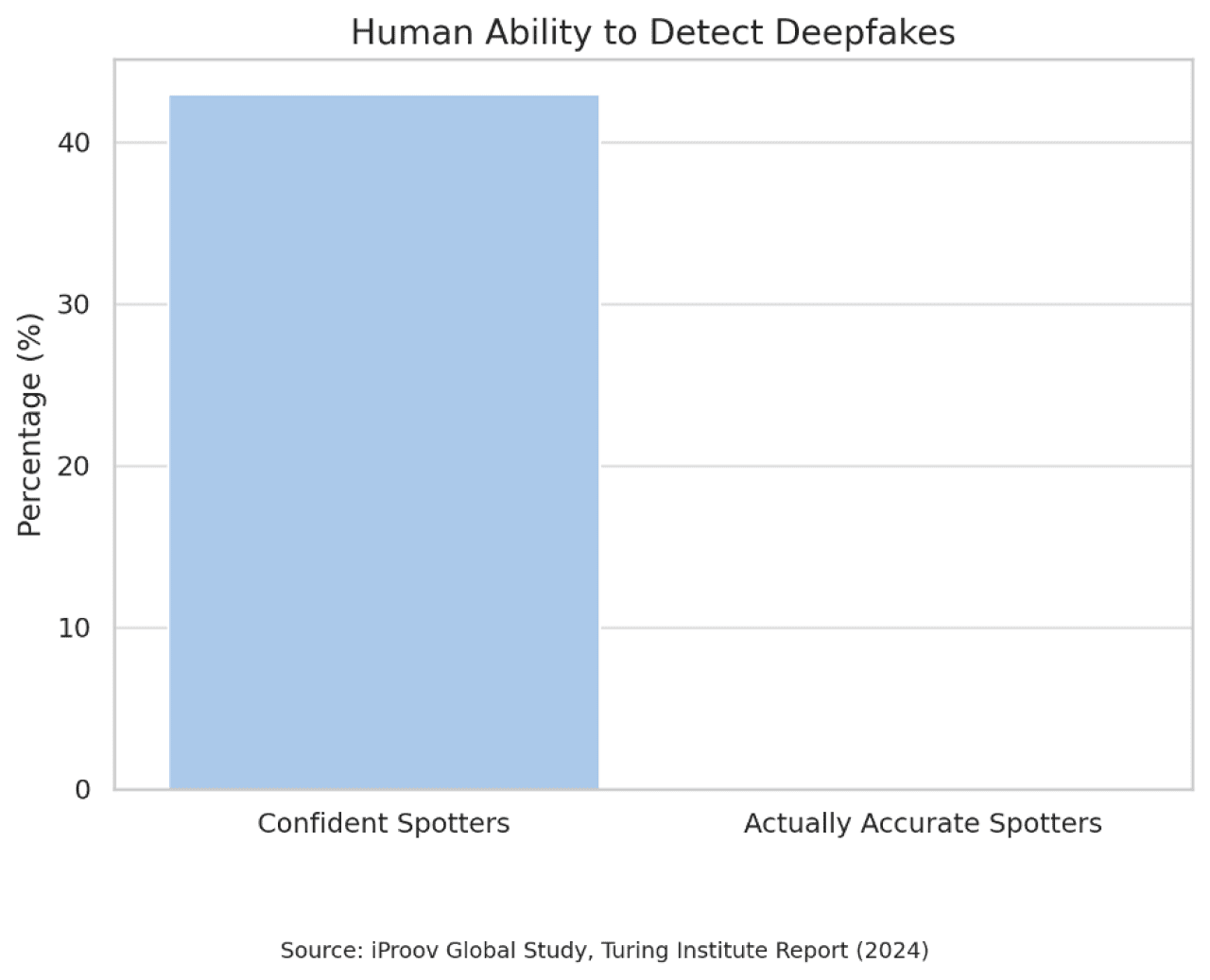

Even experienced professionals are vulnerable to high-quality deepfakes. As synthetic media becomes more realistic, spotting it with the naked eye is harder than most people think.

A 2024 study from iProov revealed a significant gap between confidence and accuracy in detecting deepfakes. While 60% of respondents claimed they were confident in their ability to identify deepfakes, the reality was starkly different.

In practice, only 0.1% of participants accurately distinguished deepfakes from real images, videos, and audio content. In other words, those who felt the most certain about their detection skills were often the most easily deceived.

This gap between confidence and accuracy shows how convincing deepfakes have become, and why relying on human judgment alone is no longer enough.

With human detection so unreliable, spotting deepfakes requires careful observation. While these signs may become harder to notice as technology improves, they remain useful for identifying many fakes today.

Unnatural blinking or blank stares

People in deepfakes often blink too frequently or too slowly. Sometimes they don’t blink at all. This is because blinking patterns are hard to replicate accurately with current models.

Slight mismatch between audio and lip movement

When the person moves their mouth, the timing of the lips may not fully match the audio. This is a common giveaway in lower-quality fakes.

Warped earrings, glasses, or shadows

Look for visual distortions around accessories or lighting. In high-quality deepfakes, these artifacts are reduced, but many videos still show warped jewelry or inconsistent shadows.

Jerky neck or jaw movement

The way someone tilts their head or moves their jaw can feel robotic. This is because deepfakes sometimes struggle to model natural physics, especially when the person moves quickly or unpredictably.

Suspicious source, missing metadata

A real video typically comes from a trusted source and includes metadata like time, date, and device info. AI-generated videos often lack this data or show signs of editing.

Facial hair transformations

Pay attention to facial hair that looks inconsistent or changes during the video. If a beard or mustache seems to flicker, change shape, or disappear between frames, it’s likely a fake. Other facial transformations to watch out for include sudden smoothing of wrinkles, shifting jawlines, or mismatched facial symmetry.

Too much glare

Shiny foreheads, overly reflective glasses, or plastic-looking skin are common problems in synthetic rendering, even in high-end deepfake manipulations.

Unnaturally smooth skin

While deepfake skin looks similar to the real deal, it may appear too smooth, flat, or uniformly lit. Human skin naturally has pores, facial moles, blemishes, and subtle shadows that are hard to fake.

Note: Learning how to spot deepfakes has never been more important. While none of these signs are absolute proof, spotting two or more should raise red flags.
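
For readers who want to make the "two or more" rule concrete, here is a minimal Python sketch of it as a checklist tally. The sign labels, the `review_clip` function, and the threshold constant are hypothetical names chosen for illustration, and the sketch only counts signs that a human viewer has already noticed. It does not analyze video or audio, and a low count is not evidence that a clip is genuine.

```python
# Hypothetical labels for the warning signs listed above (names are illustrative).
WARNING_SIGNS = (
    "unnatural_blinking",
    "audio_lip_mismatch",
    "warped_accessories_or_shadows",
    "jerky_neck_or_jaw",
    "suspicious_source_or_missing_metadata",
    "facial_hair_changes",
    "excessive_glare",
    "unnaturally_smooth_skin",
)

# "Two or more" signs should raise red flags, per the note above.
RED_FLAG_THRESHOLD = 2


def review_clip(observed):
    """Return (count, flagged) for the signs a viewer noticed in a clip."""
    noticed = set(observed)  # ignore duplicates
    unknown = noticed - set(WARNING_SIGNS)
    if unknown:
        raise ValueError(f"Unknown sign(s): {sorted(unknown)}")
    count = len(noticed)
    return count, count >= RED_FLAG_THRESHOLD


if __name__ == "__main__":
    count, flagged = review_clip({"audio_lip_mismatch", "excessive_glare"})
    print(f"{count} sign(s) noticed; treat as suspicious: {flagged}")
```

A tally like this is only a prompt for further checking, such as verifying the source through a separate channel.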

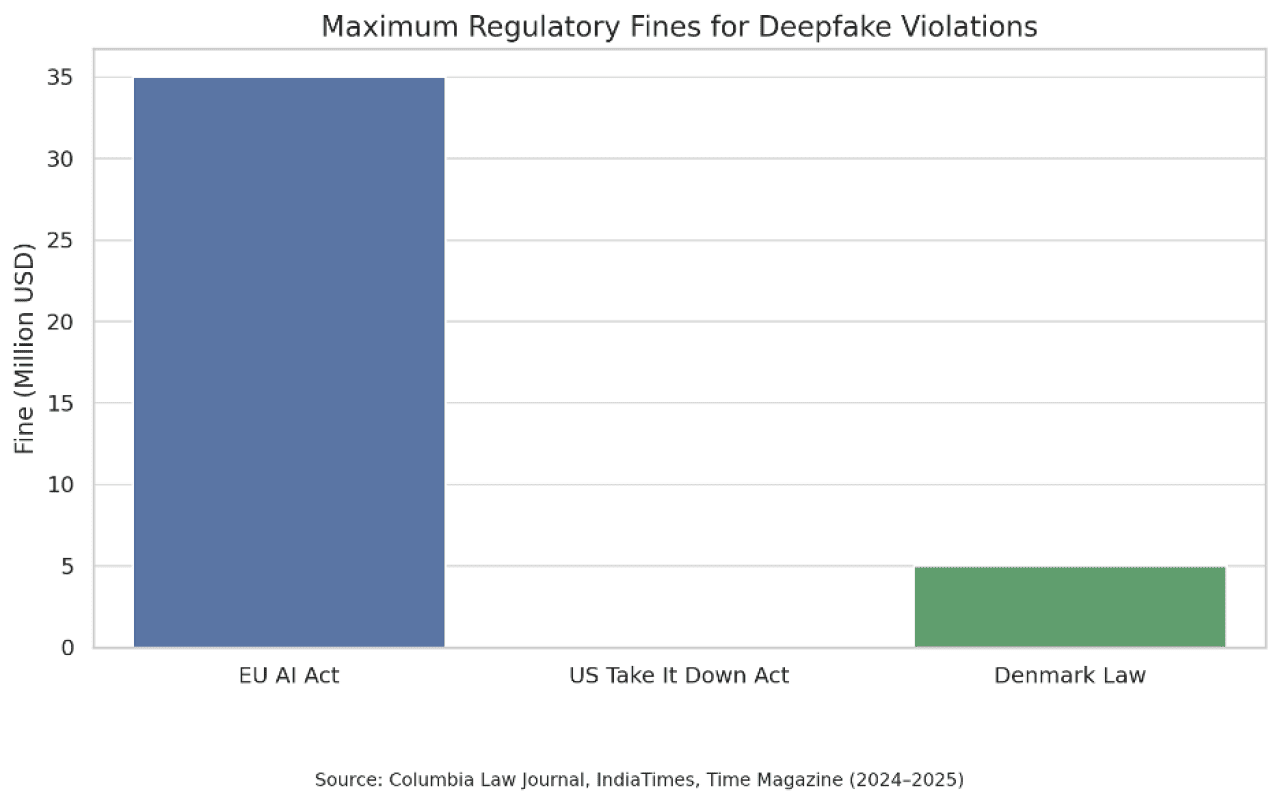

Governments worldwide are starting to respond, but the efforts vary widely in scope, funding, and enforcement. Here's a look at how key regions are responding:

These laws are a step forward, but legal systems are struggling to keep up with how rapidly deepfake technology is evolving.

Deepfakes are already creating real-world harm across industries and communities:

These consequences affect real people today, not in some distant future. From boardroom fraud to bedroom violations, deepfakes create tangible harm that demands immediate protective action.

While regulation and detection tech continue to evolve, there are steps you can take today to protect yourself and others.

For businesses:

For individuals:

Deepfakes aren’t just a problem for celebrities or politicians. They’re a threat to everyone. They undermine public trust, damage brand reputations, and can lead to serious financial and personal harm.

They are already being used to manipulate elections, commit fraud, and humiliate people for clicks. The only way to stay ahead is through awareness, education, and proactive defense.

Synthetic media is already here, and it's only getting better at fooling people. Whether you are a business leader, content creator, or just trying to figure out what is real, knowing how to detect deepfakes matters now more than ever.

This story was produced by StayModern and reviewed and distributed by Stacker.
